Getting Creative with Generative Adversarial Networks

Oh no, grandpa started painting again

Lucy’s grandfather has been passionate about art all his life. When he had less grey hair and more teeth, he collected all of his paintbrushes and went out to paint some beautiful landscapes. In recent years grandpa’s eyes have deteriorated considerably, making it impossible to properly see the landscapes.

But grandpa is stubborn and wants to create paintings similar to those made by the great masters. He does not know what such paintings should look like but he happens to have a granddaughter – Lucy – who studies art and is very familiar with many masterpieces. Lucy must determine whether the paintings that her grandpa makes look like those made by the real great masters, or are fakes.

This analogy shows the basic idea of how a GAN works. Both grandpa and Lucy learn from the outcome to improve their future performance. As Lucy becomes better at detecting amateurish paintings, grandpa tries to become better at creating convincing ones.

Generation X and Z

Grandpa and Lucy can be seen as the two networks that together make up a Generative Adversarial Network (GAN). The first network (the “generator” or grandpa) tries to mimic the real data, while the second one (the “discriminator” or Lucy) tries to distinguish between the generator’s output and the real data.

The generator is trained to create better images until the discriminator can no longer see the difference between the generated and original data. This set-up is a zero-sum, non-cooperative game, also known as a minimax game. Grandpa tries to win and so does Lucy, but they can’t both win. Ideally, the learning process ends in the famous Nash equilibrium from game theory, where neither player can improve by changing strategy alone, although in practice training is not guaranteed to get there.
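For readers who like to see the game written down, the minimax objective is commonly stated as below. The symbols are introduced here for illustration and are not used elsewhere in this post: $D$ is the discriminator (Lucy), $G$ is the generator (grandpa), $x$ is a real sample, $z$ is the random input given to the generator, and $D(\cdot)$ is assumed to output the probability that a sample is real.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

Lucy wants to make this value as large as possible by scoring real paintings high and generated ones low, while grandpa wants to make it as small as possible by getting his paintings scored high.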

A closer look

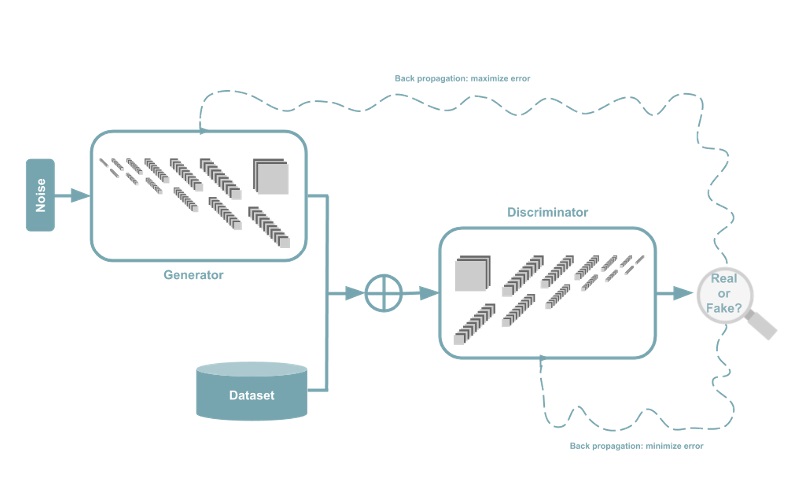

A configuration is created in which a model is trained to generate new samples. This is done by allowing the generating model to compete with another model that has been developed to recognize real data.

The configuration, therefore, consists of two models: a Generator and a Discriminator. Both are often neural networks. Imagine a dataset containing images of all kinds of paintings. As described above, two networks are trained: a Generator network that generates new images of paintings and a Discriminator network that must determine whether an image was generated by the Generator or comes from the dataset of real paintings.

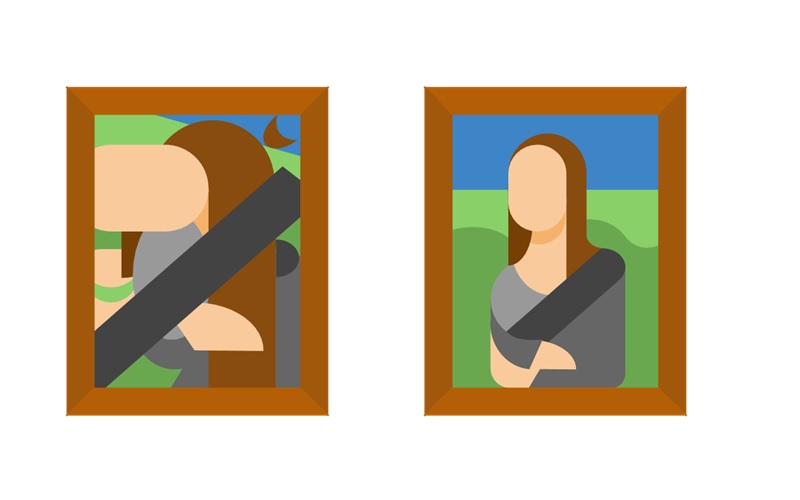

The Generator generates new samples and tries to make the Discriminator think that the generated samples come from the “true dataset”. In this process, it is important that the Generator produces images in the style of all kinds of painters, not just one (e.g. Monet). Otherwise, it becomes very easy for the Discriminator to recognize the newly generated samples. To achieve this required variation in paintings, the Generator receives random input. Because the network has not been trained at the beginning and images of paintings are quite specific, there is a good chance that the Generator will only produce images that look like noise in the beginning. It takes many, many attempts before the network starts to produce output close to the desired output, for example a realistic-looking image of the Mona Lisa.
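To make the role of the random input concrete, here is a minimal sketch of an untrained toy Generator in plain Python. Everything in it is an assumption for illustration: the names `make_generator` and `sample_noise`, the single linear layer with a tanh, and the tiny sizes. A real Generator would be a much larger neural network producing full images.

```python
import math
import random

def make_generator(noise_dim, output_dim, seed=0):
    """Return a toy, untrained generator: one random linear layer plus tanh."""
    rng = random.Random(seed)
    # Random weights stand in for a network that has not been trained yet.
    weights = [[rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
               for _ in range(output_dim)]

    def generate(z):
        # Map the noise vector z to output_dim "pixel" values in [-1, 1].
        return [math.tanh(sum(w * zi for w, zi in zip(row, z)))
                for row in weights]

    return generate

def sample_noise(noise_dim, rng):
    """Draw the random input that gives the generator its variation."""
    return [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]

rng = random.Random(42)
generator = make_generator(noise_dim=4, output_dim=8)

# Two different noise vectors lead to two different "paintings".
painting_a = generator(sample_noise(4, rng))
painting_b = generator(sample_noise(4, rng))
```

Because the weights are random, both outputs are meaningless at this stage, which matches the noise-like images described above. Training would adjust the weights; the random input stays and keeps providing variety.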

The Discriminator wants to distinguish real samples from generated ones. For this, the Discriminator assigns a value to each sample it receives: 0 for generated samples, 1 for real samples. In practice this is usually a score between 0 and 1 that expresses how likely the sample is to be real. Remember that the Generator only learns from these scores and has never seen the real samples.
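The scoring described above can be turned into a training signal with binary cross-entropy, a common choice for this kind of real-versus-generated classification. The sketch below assumes the Discriminator outputs a probability between 0 and 1; the function names and the example scores are made up for illustration.

```python
import math

def binary_cross_entropy(prediction, label, eps=1e-12):
    """Penalty for predicting `prediction` when the true label is 0 or 1."""
    p = min(max(prediction, eps), 1.0 - eps)  # avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))

def discriminator_loss(scores_real, scores_fake):
    """Average penalty: real samples should score 1, generated samples 0."""
    real_loss = sum(binary_cross_entropy(s, 1) for s in scores_real) / len(scores_real)
    fake_loss = sum(binary_cross_entropy(s, 0) for s in scores_fake) / len(scores_fake)
    return real_loss + fake_loss

# A Discriminator that separates real from generated samples well...
confident = discriminator_loss(scores_real=[0.9, 0.95], scores_fake=[0.1, 0.05])
# ...and one that cannot tell them apart and answers 0.5 for everything.
fooled = discriminator_loss(scores_real=[0.5, 0.5], scores_fake=[0.5, 0.5])
```

The confident Discriminator gets the lower loss. The fooled one ends up at `2 * log(2)`, which is what pure guessing costs under this loss.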

A GAN has an optimal solution at the point where the Generator perfectly replicates the underlying distribution of real data. This means that the Generator has learned to create new paintings that look like they could have been painted by a famous artist.
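This optimum can be stated a little more precisely. Using notation that is an assumption of this sketch ($p_{\text{data}}$ for the distribution of real paintings, $p_g$ for the distribution of generated ones), the best possible Discriminator for a fixed Generator is known to be:

$$
D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
$$

When the Generator replicates the real distribution perfectly, $p_g = p_{\text{data}}$, so $D^*(x) = \tfrac{1}{2}$ for every painting. In other words, even the best possible Lucy can do no better than flip a coin.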

Family feud

Like every family, Lucy and her grandpa sometimes argue. One of the typical issues with using GANs is a problem called mode collapse. This means that grandpa has learned to recreate the Mona Lisa perfectly, but he no longer cares about reproducing other works. Every time Lucy visits, he shows her the exact same painting and Lucy is fooled every time. A lot of research has been presented in the last couple of years that tries to solve this problem.
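A simple way to picture mode collapse is to count how many of the known "modes" show up in a batch of generated samples. The sketch below is a toy diagnostic, not an established metric: it assumes every generated painting can be labeled with a style, which in practice would itself require a classifier. The function name and the painter list are illustrative.

```python
from collections import Counter

def mode_coverage(generated_labels, known_modes):
    """Fraction of known modes that appear at least once in the generated batch."""
    counts = Counter(generated_labels)
    covered = [mode for mode in known_modes if counts[mode] > 0]
    return len(covered) / len(known_modes)

# Hypothetical styles present in the real dataset.
known_modes = ["Monet", "Van Gogh", "Da Vinci", "Vermeer"]

# A healthy Generator produces paintings in every style.
healthy_batch = ["Monet", "Da Vinci", "Vermeer", "Van Gogh", "Monet"]
# A collapsed Generator paints the Mona Lisa over and over.
collapsed_batch = ["Da Vinci"] * 5

print(mode_coverage(healthy_batch, known_modes))    # 1.0
print(mode_coverage(collapsed_batch, known_modes))  # 0.25
```

A coverage that drops towards `1 / len(known_modes)` during training is a hint that grandpa has settled on a single painting.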

Another common problem in training GANs is getting them to converge. Vanishing gradients and diminishing discriminator feedback are challenges that aren’t always easy to solve.
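The vanishing gradient problem can be illustrated with a few lines of arithmetic. The sketch below assumes the Discriminator ends in a sigmoid, so its score is `sigmoid(logit)`. It compares the gradient the Generator receives from the minimax term `log(1 - D(G(z)))` with the gradient from `-log(D(G(z)))`, a frequently used alternative often called the non-saturating loss. The function names are chosen for this example.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def saturating_grad(logit):
    """d/d(logit) of log(1 - sigmoid(logit)), the generator's minimax term."""
    return -sigmoid(logit)

def non_saturating_grad(logit):
    """d/d(logit) of -log(sigmoid(logit)), the alternative generator loss."""
    return -(1.0 - sigmoid(logit))

# Early in training the Discriminator is very sure a generated sample is fake,
# which corresponds to a strongly negative logit.
logit = -8.0
weak_signal = saturating_grad(logit)        # magnitude close to 0
strong_signal = non_saturating_grad(logit)  # magnitude close to 1
```

When Lucy rejects a painting with near certainty, the minimax term gives grandpa almost no gradient to learn from, while the alternative loss still provides a usable signal. This is one reason the second form is often preferred in practice.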

It ain’t over yet

At the moment there is a lot of hype about GANs and they are one of the most interesting new concepts in AI in recent years. GANs can be used for many things such as the generation of music, art, images and videos. There is a ton of research going into GANs and you can expect many more cool and useful applications to be discovered.

Industrial applications can be found in design, where specialists can use computer programs that assist in the creative process, and in image restoration tasks such as super-resolution or inpainting.

Can’t get enough of GANs, or do you want to implement them in your business? Contact us! We’d love to answer any questions you may have.