A crash course into Natural Language Processing

What is natural language processing? (NLP)

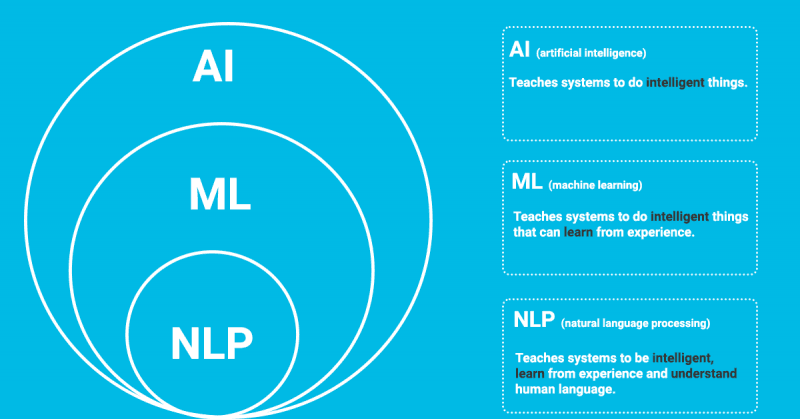

NLP stands for Natural Language Processing and it has grown into a fully-fledged branch of AI. In a nutshell, NLP makes it possible for computers to understand human language while still retaining the ability to process data at a much faster rate than humans.

While natural language processing isn’t a new science, the technology is rapidly advancing thanks to an increased interest in human-to-machine communications, plus an availability of big data, powerful computing and enhanced algorithms.

Why is NLP important?

Processing large volumes of textual data

Today’s machines can analyse more language-based data than humans, without needing a break and in a consistent, (and if constructed well) unbiased way. Considering the staggering amount of unstructured data that is generated every day, from medical records to social media, automation will be critical to fully analyse text and speech data efficiently.

The most well-known examples are Google Assistant, Siri and Alexa. People use Google translate every day for translating text in all kinds of different languages. Or the app Grammarly which helps people improve their writing skills by automatically detecting grammar and spelling mistakes.

Another well-known application of NLP are chatbots, where you can have a more natural conversation with a computer to solve issues. Your spam filter also uses NLP technology to keep unwanted promotional emails out of your inbox.

They are still far from perfect of course but the increase in accuracy has made huge leaps in the past decade.

Imagine the revelations of all the massive amounts of unstructured text you gather in your organisation and from your stakeholders. It may hold the key to the best strategy for your organisation ever.

Structuring a highly unstructured data source

We might not always be aware of it but we, as colleagues on the work floor, do not always communicate in a clear way. The way we chat with each other since the dawn of the internet hasn’t made it all that easier, reading through all the colloquialisms, abbreviations and misspellings. As frustratingly it is for us to keep track of all this different communication styles and information sources, imagine how it would be for a software program.

But with the help of NLP, computer programs made huge leaps to analyse and interpret human language and position them into an appropriate context.

A revolutionary approach

First, to automate these processes and deliver accurate responses, you’ll need machine learning. The ability to instruct computer programs to automatically learn from data.

This is where the expertise of ML2Grow comes into play.

To recap, machine learning is the process of applying algorithms that teach machines how to automatically learn and improve from experience without being explicitly programmed.

The first step is to know what the machine needs to learn. We create a learning framework and provide relevant and clean data for the machine to learn from. Input is key here. Garbage in, garbage out.

AI-powered chatbots, for example, use NLP to interpret what users say and what they intend to do, and machine learning to automatically deliver more accurate responses by learning from hundreds or thousands of past interactions.

Unlike a programmed tool that you can buy everywhere, a machine learning model can generalize and deal with novel cases. If the model stumbles on something it has seen before then it can use its prior learning to evaluate the case.

The big value for businesses lies here in the fact that NLP features can turn unstructured text into useable data and insights in no time and where the model continuously improve at the task you’ve set.

We can put any sort of text into our machine model: social media comments, online reviews, survey responses, even financial, medical, legal and regulatory documents.

How does it work?

In general terms, NLP tasks break down language into shorter, elemental pieces, try to understand relationships between the pieces and explore how the pieces work together to create meaning.

When you look at a chunk of text, there are always three important layers to analyse.

Semantic information

Semantic information is the specific meaning of an individual word. For example ‘crash’ can mean an auto accident, a drop in the stock market, attending a party without being invited or ocean waves hitting the shore.

Sentences and words can have many different interpretations, e.g. consider the sentence: “Greg’s ball cleared the fence.” Europeans would think that ‘the ball’ refers to a soccer ball, while most Americans might think of a baseball instead. Without NLP systems, a computer can’t process the context.

Syntax information

The second key component of text is a sentence or phrase structure, known as syntax information. In NLP, syntactic analysis is used to assess how the natural language aligns with the grammatical rules.

Take for example the sentence: “Although they were tired after the marathon, the cousins decided to go to a celebration at the park.” Who are ‘they’, the cousins or someone else?

Context information

And at last, we must understand the context of a word or sentence. What is the concept being discussed? “It was an idyllic day to walk in the park.” What is the meaning of ‘idyllic’ and why was it perfect to walk in the park? Probably because it was warm and sunny.

The analyses are key to understanding the grammatical structure of a text and identifying how words relate to each other in a given context. But, transforming text into something machines can process is complicated.

A brief overview of NLP tasks

Basic NLP preprocessing and learning tasks include tokenization and parsing, lemmatisation/stemming, part-of-speech tagging, language detection and identification of semantic relationships. If you ever diagrammed sentences in grade school, you’ve done these tasks manually before.

There are several techniques that can be used to ‘clean’ a dataset and make it more organized.

Part-of-speech tagging

Is the process of determining the part-of-speech (POS) of a particular word or piece of text based on its use and context. It describes the characteristic structure of lexical terms within a sentence or text, therefore, we can use them for making assumptions about the semantics. Other applications of POS tagging include:

Named entity recognition

Identifies words or phrases as useful entities. For example, NEM identifies ‘Ghent’ as a location and ‘Greg’ as a man’s name.

Co-reference resolution

Is the task of identifying if and when two words refer to the same entity. For example ‘he’ = ‘Greg’.

Speech recognition

Is the task of reliably converting voice data into text data. Speech recognition is required for any application that follows voice commands or answers spoken questions.

Word sense disambiguation

Is the selection of the meaning of a word with multiple meanings through a process of semantic analysis that determine the word that makes the most sense in the given context.

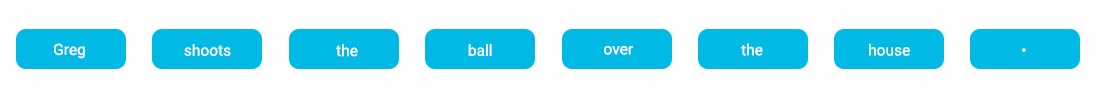

Tokenization

When a document is split into sentences, it’s easier to process them one at a time. The next step is to separate the sentence into separate words or tokens. This is why it’s called tokenization. It would look something like this:

Stop word removal

The next step could be the removal of common words that add little or no unique information, such as prepositions and articles (“over”, “the”).

Stemming

Refers to the process of slicing the end of the beginning of words with the intention of removing affixes and suffixes. The problem is that affixes can create or expand new forms of the same word. “Greg is helpful to bring the ball back to the playing ground”. Take for example “helpful”, “ful” is the suffix here attached at the end of the word.

We make the computer chop the part to the word “help”. We use this to correct spelling errors from tokens. This is not used as a grammar exercise for the computer but to improve the performance of our NLP model.

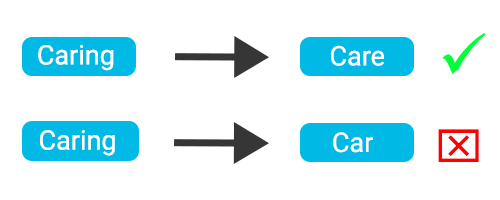

Lemmatisation

Unlike stemming, lemmatisation depends on correctly identifying the intended part of speech and the meaning of a word in a sentence.

For example, the words “shooting”, “shoots”,”shot”, are all part of the same verb. “Greg is shooting the ball” or “Greg shot the ball” means the same for us but in a different conjugation. For a computer, it is helpful to know the base form of each word so that you know that both sentences are talking about the same concept. So the base form of the verb is “shoot”.

Lemmatisation is figuring out the most basic form or lemma of each word in the sentence. In our example, it’s finding the root form of our verb “shooting”.

Example of lemmatisation (care) vs stemming (car).

NLP libraries

Natural language processing is one of the most complex fields within artificial intelligence. But doing the above manually would be sheer impossible. There are many NLP libraries available that is doing all that hard work so that our machine learning engineers can focus on making the models work perfectly than wasting time on sentiment analysis or keyword extraction.

Next time we will discuss some of the most popular open-source NLP libraries our team uses and why a custom-made solution can be an alternative to off-the-shelve solutions.